I like to mark special, recurring daily moments by having every Sonos in my home play a short audio file, like having Fred Flintstone announce the end of the workday.

I’ve had this capability for years, but my original implementation was retired along with my lightswitch.

My requirements were already fixed, since I was just porting existing functionality to Kubernetes. But thinking about the requirements as if I were starting from scratch led me to a cleaner, Kubernetes-native design.

Requirements

Sonos devices publish a SOAP API for playback of arbitrary media files. The caller passes a URI to the desired media file. I don’t have or want a dedicated media server. In the old implementation I ran an Apache web server on an Ubuntu host, serving up just one or two MP3 files as chimes.

Requirement: Media hosting

When playing the chime, I’d like to pause whatever is currently playing, play the chime, and resume whatever was playing previously, exactly where it left off, at the same volume.

Requirement: Playback with interrupt/resume

The chime should play on every Sonos (called Zones in Sonos-land) on my network. They may not all be in the same group (AKA Zone Group).

Requirement: All Zones

Finally, the chimes must be schedulable. I want to run them at specific times, maybe on specific days, and so on.

Requirement: Scheduled Chimes

Design

Implementation

Media Hosting

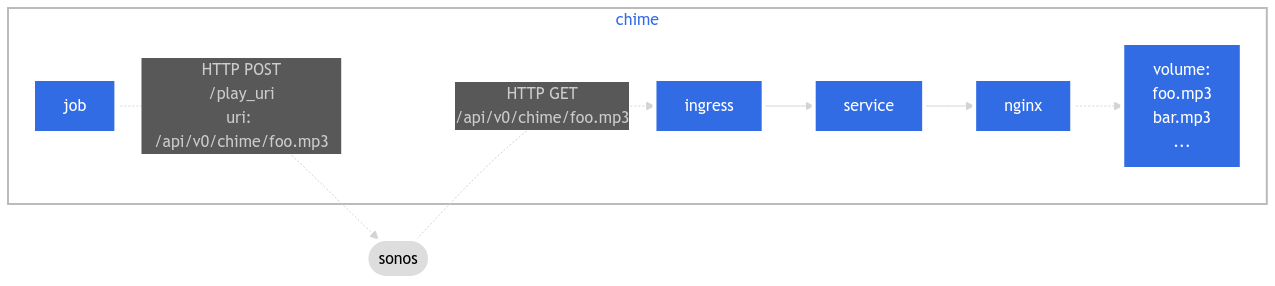

Ideally my whole implementation should be running in Kubernetes, for simplicity and so I can declare my whole app as IaC. In that case, it’s pretty straightforward to create a Persistent Volume, preload it with my chime MP3 files, mount it into an otherwise unmodified nginx pod, and publish that as an ingress on my cluster.

This architecture is actually an example in the Kubernetes documentation, signaling that my design resembles best practice.

Enable storage

I enabled the hostpath storage microk8s addon to host my Persistent Volume.

Then I wrote the Pulumi code.

Claim storage

Declare the PVC:

    kubernetes.core.v1.PersistentVolumeClaim(
        NAME,
        metadata=homeslice.metadata(NAME),
        spec=kubernetes.core.v1.PersistentVolumeClaimSpecArgs(
            access_modes=["ReadWriteOnce", "ReadOnlyMany"],
            resources=kubernetes.core.v1.ResourceRequirementsArgs(
                requests={
                    "storage": "256Mi",
                },
                limits={
                    "storage": "256Mi",
                },
            ),
        ),
    )

Declare a Volume and a Volume Mount:

    volumes = [
        kubernetes.core.v1.VolumeArgs(
            name=NAME,
            persistent_volume_claim=kubernetes.core.v1.PersistentVolumeClaimVolumeSourceArgs(
                claim_name=NAME,
            ),
        ),
    ]

    volume_mounts = [
        kubernetes.core.v1.VolumeMountArgs(
            name=NAME,
            mount_path=pvc_mount_path,
            read_only=True,
        ),
    ]

Use the Volume and Volume Mount in my nginx Deployment. Along the way I refactored my Pulumi code, since I was repeating some patterns across my various deployments. So now, in my codebase, declaring a Deployment is really simple.

    homeslice.deployment(
        NAME, nginx, ports=ports, volumes=volumes, volume_mounts=volume_mounts
    )
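To give a feel for what a helper like this hides, here is a minimal sketch that builds a Deployment manifest as a plain dictionary. It is not the homeslice module itself: the function name deployment_manifest, the "app" label, and the single-container layout are all assumptions made for illustration.

```python
def deployment_manifest(name, image, ports=None, volumes=None,
                        volume_mounts=None, env_from=None, replicas=1):
    """Build a single-container Deployment manifest as a plain dict.

    Hypothetical helper: it only shows the boilerplate a one-line
    wrapper can absorb. Optional sections are added only when given.
    """
    container = {"name": name, "image": image}
    if ports:
        container["ports"] = ports
    if volume_mounts:
        container["volumeMounts"] = volume_mounts
    if env_from:
        container["envFrom"] = env_from

    pod_spec = {"containers": [container]}
    if volumes:
        pod_spec["volumes"] = volumes

    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": pod_spec,
            },
        },
    }
```

Every caller gets the same labels and selector for free, so each deployment only has to state what is actually different about it.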

Add files

I had a couple of choices to get my chime MP3 files onto the Persistent Volume.

I chose the first option: temporarily mounting the Persistent Volume with read_only=False, then using kubectl to copy my chimes to the PV.

% kubectl cp whatever.mp3 $POD:/usr/share/nginx/html -n homeslice

While messing around in the back-end storage layer of microk8s sounds fun, kubectl cp is a more widely applicable operation and worth practicing.

Ingress

Setting up the ingress required an ImplementationSpecific rewrite-target rule. I want to serve my chime MP3 files from /api/v0/chime, to maintain consistency with all the other paths hosted on my cluster, but the Persistent Volume didn’t have that directory structure. I could have simply created an /api/v0/chime directory on my Persistent Volume and dropped the MP3 files there, but it was more fun to learn how to configure Rewrite Targets.
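As a rough illustration of the rewrite, here is a sketch of an Ingress manifest built as a plain dictionary, plus a tiny function that mimics what the rule does to a request path. It assumes the ingress-nginx controller and its rewrite-target and use-regex annotations. The service name, port, and capture-group pattern are my assumptions, not the configuration actually running on the cluster, and the simulation is a simplification of how nginx matches paths.

```python
import re

PREFIX = "/api/v0/chime"


def chime_ingress_manifest(name, service_name, service_port):
    """Build an Ingress manifest that strips PREFIX before proxying."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "annotations": {
                # ingress-nginx annotations: treat the path as a regex and
                # forward only the second capture group to the backend.
                "nginx.ingress.kubernetes.io/use-regex": "true",
                "nginx.ingress.kubernetes.io/rewrite-target": "/$2",
            },
        },
        "spec": {
            "rules": [{
                "http": {
                    "paths": [{
                        "path": PREFIX + "(/|$)(.*)",
                        "pathType": "ImplementationSpecific",
                        "backend": {
                            "service": {
                                "name": service_name,
                                "port": {"number": service_port},
                            },
                        },
                    }],
                },
            }],
        },
    }


def simulate_rewrite(request_path):
    """Return the path the backend would see, or None if the rule misses."""
    match = re.match("^" + re.escape(PREFIX) + r"(/|$)(.*)", request_path)
    if match is None:
        return None
    return "/" + match.group(2)
```

With a rule like this, a request for /api/v0/chime/whatever.mp3 reaches nginx as /whatever.mp3, so the files can sit at the root of the Persistent Volume.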

Playback with interrupt/resume

I already had an implementation for this, written in Python. So far I had no Python code running in my home Kubernetes cluster, which simplified and standardized my build system, since I only had to support Go.

Unfortunately, there are no well-maintained Go libraries for Sonos. The gold standard remains the Python library SoCo, which my original implementation used.

So, I could reuse code, which is great, but I’d have to introduce Python into my ecosystem.

The SoCo library is quite nice, very well tested by other folks and myself, and provides a very clean snapshot feature that implements interrupt/resume.

This line ties it all together:

zone.play_uri(args.media_uri, title=args.media_title)

As far as I can tell, the title argument has no effect, but everything else works as expected.
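For context, here is a minimal sketch of how the snapshot feature can wrap that line. It is not the actual chime.py: the function names, the polling loop, and the timeout are assumptions. The SoCo calls it relies on are Snapshot.snapshot(), Snapshot.restore(), SoCo.play_uri(), and SoCo.get_current_transport_info(). The positional arguments follow the order the cronjobs pass them in: IP address, media title, media URI.

```python
import argparse
import time


def play_chime(zone, snapshot, media_uri, media_title="",
               poll_seconds=0.5, timeout_seconds=60.0):
    """Save the zone's state, play the chime, wait, then restore."""
    snapshot.snapshot()
    try:
        zone.play_uri(media_uri, title=media_title)
        deadline = time.monotonic() + timeout_seconds
        while time.monotonic() < deadline:
            info = zone.get_current_transport_info()
            # Keep waiting while the chime is starting or still playing.
            if info["current_transport_state"] not in ("PLAYING", "TRANSITIONING"):
                break
            time.sleep(poll_seconds)
    finally:
        # Restore even if playback fails, so the zone is not left silent.
        snapshot.restore()


def parse_args(argv):
    """Parse the three positional arguments the cronjob supplies."""
    parser = argparse.ArgumentParser(description="Play a chime on one Sonos zone.")
    parser.add_argument("ip_address")
    parser.add_argument("media_title")
    parser.add_argument("media_uri")
    return parser.parse_args(argv)


def main(argv):
    # Imported here so the helpers above can be exercised without SoCo.
    from soco import SoCo
    from soco.snapshot import Snapshot

    args = parse_args(argv)
    zone = SoCo(args.ip_address)
    play_chime(zone, Snapshot(zone), args.media_uri, args.media_title)
```

Calling main(sys.argv[1:]) from the container's entrypoint would run one chime on one zone. Passing the zone and snapshot in as arguments keeps the playback logic testable without a speaker on the network.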

All Zones

Previously, my Python playback/resume implementation discovered all Zone Groups (groups of Sonos devices) on my network, looped through the groups, and played the chime on each device in the group.

For the new implementation, I solved that problem differently. I was already planning to use Kubernetes cronjobs to schedule the chimes, so I could use one cronjob for each Zone Group.

Aside from simplicity, I faced a practical challenge: how could I discover Sonos devices on my network from inside a Kubernetes cluster? Device discovery is done with a UDP broadcast, so I’d need to route replies back to the pod that initiated the broadcast. How?

I could make something like this work using a NodePort, but what if I wanted to horizontally scale that deployment? Sure, my home network discovery would never need more than one pod, but I’m architecting here!

Ultimately, how I get the Sonos device IP addresses is orthogonal to the operation of chime.py, by design.

To keep things simple, I wrote a discovery script that I can run manually on my laptop, and set my device IP addresses as configuration.
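A discovery script along those lines can be very small. The sketch below is an assumption about its shape, not the script I actually run: it uses soco.discover() plus the player_name, ip_address, and is_coordinator properties, and it keeps only group coordinators on the theory that one address per Zone Group is enough. The "name" key is hypothetical; "ip_address" matches what the cronjob code reads.

```python
import json


def zones_to_config(zones):
    """Turn discovered zones into config entries, one per group coordinator."""
    entries = [
        {"name": zone.player_name, "ip_address": zone.ip_address}
        for zone in zones
        if zone.is_coordinator
    ]
    # Sort so the output is stable from run to run.
    return sorted(entries, key=lambda entry: entry["name"])


def main():
    # Imported here so zones_to_config can be used without SoCo installed.
    import soco

    # discover() returns None when nothing answers within the timeout.
    zones = soco.discover(timeout=5) or set()
    print(json.dumps(zones_to_config(zones), indent=2))
```

Run from a laptop on the same network as the speakers, main() prints a JSON list that can be pasted into configuration.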

Besides keeping my code simple, now I can omit Zone Groups just by leaving them out of my secrets file. For instance, if I don’t want to wake the chickens when my 3AM Eternal chime plays, I can just omit the Chicken Coop Zone Group from my secrets file.

Later, if I want, I can go back down the rabbit hole and set up automatic device discovery, replacing my manual script but keeping everything else the same. That seems like a good design.

Schedule

Previously, I had one cron job set in a config file on my Linux server. It invoked my chime script. I reused the same basic idea, replacing the single cron job with multiple Kubernetes cronjobs.

Pulumi makes it easy and clean to loop through my list of chimes, each with its own schedule and media file, and my list of Zone Groups, creating a matrix of Kubernetes cronjobs to keep my home musical and its occupants aware of daily milestone moments.

    # cronjobs schedule the chimes.
    for chime in chimes:
        for zone in CHIME_SECRET.ZONES:
            name = make_name(NAME, chime, zone)
            args = [
                zone["ip_address"],
                chime["media_title"],
                chime["media_uri"].replace("", CHIME_SECRET.INGRESS),
            ]
            homeslice.cronjob(
                name,
                image,
                chime["schedule"],
                args=args,
                metadata=homeslice.metadata(name),
            )

Nice and readable!
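To make the matrix concrete without needing a cluster, here is a self-contained sketch of the same expansion using plain dictionaries. The make_name helper, the "name" keys, and the "{ingress}" placeholder token are all hypothetical. A non-empty token matters, because replacing an empty string in Python inserts the replacement between every character.

```python
import re
from itertools import product

INGRESS_PLACEHOLDER = "{ingress}"  # hypothetical token inside each media_uri


def make_name(prefix, chime, zone):
    """Build a Kubernetes-safe CronJob name from the chime and zone names."""
    raw = "-".join([prefix, chime["name"], zone["name"]]).lower()
    cleaned = re.sub(r"[^a-z0-9-]+", "-", raw).strip("-")
    # CronJob names are limited to 52 characters.
    return cleaned[:52].strip("-")


def expand_cronjobs(prefix, chimes, zones, ingress):
    """Return one job description per (chime, zone) pair."""
    jobs = []
    for chime, zone in product(chimes, zones):
        jobs.append({
            "name": make_name(prefix, chime, zone),
            "schedule": chime["schedule"],
            "args": [
                zone["ip_address"],
                chime["media_title"],
                chime["media_uri"].replace(INGRESS_PLACEHOLDER, ingress),
            ],
        })
    return jobs
```

Two chimes and three Zone Groups yield six job descriptions, each carrying exactly the arguments one run of the chime script needs.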

Refactor

By this point, my Pulumi codebase had accumulated two cronjobs, five deployments and services, and multiple volume mounts. Almost all of them were configured identically, so it was time for a refactor.

After the refactor, the whole buttons app is defined in a few simple lines.

    homeslice.configmap(
        NAME,
        {
            "CLOCKTIME_URL": clocktime_url,
        },
    )

    env_from = [homeslice.env_from_configmap(NAME)]

    ports = [homeslice.port(container_port, name="http")]

    homeslice.deployment(NAME, image, env_from=env_from, ports=ports)

    homeslice.service(NAME)

    homeslice.ingress(NAME, ingress_prefixes)

I gave up a lot of Kubernetes-ish-ness in exchange for terse lines that nonetheless remain clear and obvious to someone used to Kubernetes.

During the refactor, I introduced Python linting and formatting with black and pylint, for both the Pulumi code and the new chime Python code. Linting made the refactor much easier. The linters pointed out areas of code with unnecessary complexity, and I focused on those first.

Bottom Line

There is no universe where an application this trivial needs to be deployed to Kubernetes. But deploying it to Kubernetes imposes some design constraints, and those constraints are informed by best practices that apply whether you’re expecting 10 million users or just 1. Redesigning my chime application within those constraints led to a cleaner, more extensible, shareable design.

Things that used to be implicit (the chime schedules, the location of the MP3 files, the version of the SoCo library, etc.) are now explicit and committed to git as configuration. There’s less guesswork about how things work, and boundaries are clearly defined.

Using separate cronjobs for each Zone Group replaced a Python for loop with Kubernetes constructs. This makes the Python code simpler, leaving fewer dark places for bugs to hide. It also means I can alter which Zone Groups play which chimes, through configuration alone, without modifying application code. That’s more coherent, because which Groups play which chimes is configuration.

Best of all, it works!